Hi all,

Here's today's EuroSTAR newsletter. Let me know if you're attending the conference this year; it would be good to meet up.

Wednesday, 19 October 2011

Friday, 14 October 2011

Friday, 7 October 2011

Roller-coaster of emotions and my theory of evolution

Hi all, thanks to everyone who voted. Unfortunately (for James Bond & Co.), the License to Test video didn't win.

"One doesn't realise how competitive one is until one loses at something one wants to win."

Andy Glover, Today

After crying myself to sleep a number of nights, I can safely say I'm over it :)

Congratulations to the SIGMA QT team behind the 'Quality Never Sleeps' film. It was a fantastic video with some funny one-liners! Here it is if you haven't seen it before:

Here's a cartoon first printed in STC's The Testing Planet:

Thursday, 29 September 2011

Vote Now! TeamSTAR comp for EuroSTAR

The voting for the TeamSTAR competition is now open for today only (Thursday 29th) till 4pm BST.

If you like my cartoons (I'm assuming most of you do, since you're reading this), I would appreciate it if you could vote for the License to Test video! It should only take a minute or two of your time. Just click on the image below:

Do check out the other videos, they're cool!

Many, many, many thanks!

Today's cartoon was first published in STC's The Testing Planet. I must thank Terry Bonds for spotting a typo (thanks Terry!)

Monday, 26 September 2011

A James Bond Film

A team of testers and I submitted a video for this year's EuroSTAR TeamSTAR competition.

Hope you like it! The team members are: Adam Brown (@brownie490), Jenny Lane (@mfboots), Tim Munn (@Nottsmunster) and me (@cartoontester).

The voting for the TeamSTAR competition starts this Thursday, 29th September. I'll post again on the blog on the day with a link to the voting.

It would be great if you could vote for us (if you think the video is any good of course!)

Wednesday, 14 September 2011

Sunday, 11 September 2011

Wednesday, 7 September 2011

Bored?

There are a number of ways of getting rid of boredom in testing. One is to develop an unquenchable desire to learn more and more about the application you’re testing. So keep your eyes open and explore.

Thursday, 1 September 2011

The Plan

What is more important:

- Knowing and understanding the application you’re testing

Or

- Being able to visualise how you are going to approach the testing

I suspect the answer is it depends.

From my experience, both are required for good testing. Knowing the application, its ins and outs, and having the domain knowledge should help test design. Or, from the opposite point of view, not knowing how the application works means you won’t know much about your coverage, so you’re likely to miss bugs.

Being able to visualise your plan of attack means you know what you’re going to test, what areas of the application will be tested and which areas you can ignore (at least for the time being). This provides a structure that you can work in. Not having this can either make the testing daunting or allow the testing to go off on tangents.

This is why I like Session Based Test Management (SBTM). SBTM provides enough structure and a plan to guide the testing, but still allows flexibility as the tester learns more about the application and/or finds bugs. This is especially useful if the tester is new to the application and needs to get to grips with it.

I’ve found Rob Lambert and Darren MacMillan often write about visualising their testing or ideas (using mind maps, drawings, call flows). Here are their blogs to find out more:

Tuesday, 30 August 2011

Thursday, 25 August 2011

The Cost of Automation

This cartoon was designed by Raimond Sinivee. Thanks Raimond!

Raimond had a few comments to say about the cartoon [added to post on 30-Aug-2011]

The idea behind it is that it is hard to justify automation as a testing method to managers. When we say that we are going to automate stuff, the first answer is: “Yes!”. The answer may change when the tester gives the tool cost or an estimate of the additional working hours. The manager might go: “Extra cost? I thought we were going to save!”

They want to see a direct money benefit or labor cost savings. I think this comes from assembly-line manufacturing, where there has been a lot of automation and a lot of cost savings through it. Testing is not assembly-line work – it is engineering! So if we want to compare, then we should compare tool costs in engineering, not in assembly. Automation could be taken as a helping method to cover new aspects of software that are hard or impossible to test manually. Usually these parts are not tested at all. Since nobody is testing these newly covered aspects yet, testing them is mostly an extra expense.

A tester's job is to provide information about risks, among other things. The tester tries to find ways to gather information about risks and how to mitigate them. Sometimes a tester needs a tool to find out if a risk is covered. The result of executing a test could be that there is no problem. That is also valuable information if it clears a risk, but it is hard to calculate the amount of money that was saved. We could calculate the risk impact, but that might also be disputed and manipulated.

Tuesday, 23 August 2011

Robo-Cat

In testing we can be bombarded with the notion that test automation is best. I recently read an article saying all manual testing can be replaced with a new test automation tool – what craziness! I can’t wait for someone to say all developing and coding can be automated too!

Test automation does have its place, though. When a tester is repeating a step or task while testing, it’s worth investigating whether that can be automated. Sometimes, writing quick and dirty automated scripts can save a lot of time. For example, when testing a web app, I often use a record-and-playback tool to log into the app; once logged in, I carry on with manual testing. I can re-run these steps as many times as needed, and once the testing is finished, I throw away the automated script. At other times, on a large testing project, it’s worth investing in a test automation framework if you expect to run the same checks over and over again as regression tests.

Yet test automation always has flaws. One major flaw is that automation can only check for what it is programmed to check. For example, if it is checking that the login screen is displayed, it will check for this, but it won’t check where on the screen it is displayed, what colours or font it uses, or whether anything else is displayed on the screen.
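To make the point concrete, here is a minimal Python sketch. The `Screen` class and the `check_login_screen_displayed` function are hypothetical stand-ins for whatever a real automation tool captures and checks; the only assumption that matters is that the check compares one property (the title) and nothing else, so a badly broken screen still passes.

```python
from dataclasses import dataclass, field


@dataclass
class Screen:
    """Hypothetical snapshot of a screen, as an automation tool might capture it."""
    title: str
    position: tuple  # (x, y) of the login form on the screen
    font: str
    extra_elements: list = field(default_factory=list)


def check_login_screen_displayed(screen: Screen) -> bool:
    """An automated check: it only looks at the title, because that is all it was told to look at."""
    return screen.title == "Login"


# What a human tester would expect to see
good = Screen(title="Login", position=(100, 200), font="Arial")

# A clearly broken screen: form pushed off-screen, unreadable font, stray debug output
broken = Screen(
    title="Login",
    position=(-900, 200),
    font="Wingdings",
    extra_elements=["DEBUG: stack trace"],
)

print(check_login_screen_displayed(good))    # True
print(check_login_screen_displayed(broken))  # True, the check is blind to everything else
```

A human glancing at the second screen would spot the problem straight away; the check happily reports success.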

Another flaw is that test automation can be brittle. A developer changing the layout of a screen may generate a false positive in the test automation. The tester will need to spend some time investigating why it failed and then update the script. All this adds to the maintenance costs.

From my experience, the biggest flaw is that test automation doesn’t find many bugs, and the bugs it does find tend not to be as critical as those found through manual testing. The main reason is that test automation is typically used for regression: the application has already been tested manually, most of its bugs have been identified, and an automated script has been developed to give confidence that the application has not regressed. One positive use of automation is exactly that regression testing, which is still required but is boring to most testers, since it repeats tests executed previously without much hope of finding new bugs.

I recently read this article from Adam Goucher about test automation heuristics. It’s a good read :)

Watch out for the next cartoon from Raimond Sinivee, which has a similar theme.

Sunday, 21 August 2011

Thursday, 11 August 2011

Critical

As testers, one of our main jobs is to find and report issues with the software. We are paid to find bugs. If there are bugs in the software, and inevitably there are, testers need to do what they can to uncover them.

A major problem with this is that we can come across as very negative types of people, always bearing bad news. And we don’t stop there, we also raise issues with the process, documentation, people: “Why didn’t you do any unit testing?”, “That developer doesn’t know what working in a team means!”, “This feature needs to be documented!”, “You’re not supposed to make any changes to the code now!”

One way to avoid coming across so negatively is to raise fewer bugs. But that approach is short-sighted! Another way, which is far better, is to bear good news as well as bad. I know I’m not the best coder, so when a Dev delivers a build for me to test, I know that they’ve done a better job than I would have done. Having this attitude makes it easy to give positive feedback on the software I’m testing. Also, as a tester, I try to do my best to test and to find and report bugs, but even then I make mistakes on a regular basis; I'm only human after all. But so are developers (believe it or not!), project managers and the pointy-haired boss.

Wednesday, 3 August 2011

Coverage

Coverage is tricky. You think you got everything covered, and then, WHAM!! A bug suddenly appears. You’re forced to defend your testing and you end up saying something like: “well, when I said I had everything covered, I clearly didn’t mean E-V-E-R-Y-T-H-I-N-G!” Because testing everything is impossible. Everyone knows that, including your clients/manager/PM/developer... right?

As with automation, thinking about coverage can give a false sense of security. There are so many ways software can have test coverage. Take requirements coverage, for example: do your tests provide 100% coverage of the requirements? What about the requirements that aren’t written down in a document? What about requirements that are documented but are ambiguous?

But I’m no expert, whereas Cem Kaner is. He wrote a great article on this topic that is worth a look if you’ve not read it before (if you care about testing, you should definitely read it). I came up with one type of coverage, “requirements coverage”; Cem Kaner comes up with 101 types in the article's appendix. The next time you or your manager thinks you need 100% test coverage, think again!
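A small worked example shows why a 100% figure can mislead. This is a sketch under stated assumptions: the requirement names are made up, and "requirements coverage" is taken here to mean the share of a set of requirements exercised by at least one test, which is only one of many possible definitions.

```python
def requirements_coverage(requirements: set, tested: set) -> float:
    """Percentage of the given requirements exercised by at least one test."""
    if not requirements:
        return 0.0
    return 100.0 * len(requirements & tested) / len(requirements)


# Hypothetical requirements that made it into the specification
documented = {"log in", "log out", "reset password"}

# Hypothetical expectations users have, but nobody wrote down
unwritten = {"works on a slow connection", "error messages make sense"}

# Our tests exercise every documented requirement
tested = {"log in", "log out", "reset password"}

print(requirements_coverage(documented, tested))              # 100.0
print(requirements_coverage(documented | unwritten, tested))  # 60.0
```

The tests did not change between the two lines; only the list of requirements we chose to measure against did.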

Friday, 29 July 2011

Discipline

Discipline is hard. Discipline is being responsible for your own development. Discipline is not letting your manager or employer organise all your training needs. Discipline makes you stronger.

But discipline can be fun too.

Have you attended a weekend or weeknight testing event? I’ve attended a few weeknight events recently and they’re a great training opportunity to develop as a tester. The events provide an environment where you need to think on your feet as a tester, practise testing techniques and articulate your thoughts, from test planning to reporting the issues you’ve found. All of this comes without the fear of failure, as it really doesn’t matter if you miss a bug or say something stupid; no one is going to bring it up in your next performance appraisal. It’s a win-win-win situation (the third win is because it’s a fun event – #WIN!)

Here’s a little badge I drew for the weekend testers to celebrate their 50th event anniversary.

Tuesday, 26 July 2011

Risk Based, STC and Recruitment

Today's cartoon was first published in The Testing Planet, issue 3.

You can see two brand new cartoons in the latest issue, which you can order from Amazon.

Just to let you know, I'm looking for a tester to work in my team, here's the job advert. Please let me know if you're interested and forward the link to other testers - many thanks!

Thursday, 21 July 2011

Just looking

When I try to explain what I do as a job, I have a nagging temptation to make it sound like I'm really clever and I do complex stuff at work, something like "I test that the web application’s functionality running on handheld mobile electronic devices is displayed within the device parameters and that each feature is correctly implemented according to detailed requirements and strict industry regulations". Of course, by the time I've said the second word, "test", I can tell I've lost their interest!

Ignoring the fact that we sometimes try to exaggerate our skills and knowledge, I'm a firm believer that software testing, when done well, is hard. Having said that, sometimes you can find bugs in software without clicking any buttons. There are times when testing looks very simple, especially if you’re watching an experienced tester at work. The tester might come across as just looking at or playing with the software; anyone could do what they’re doing!

I think this is similar to sportsmen and women at the top of their sport. Whenever they swing a golf club, kick a ball 40 yards, or spin a ball with great precision, they make it look so easy, as if anyone could pick up the sport and be a pro without blinking. A professional goal of mine is to be like these sportsmen and women, but in the software testing world. Whenever I attend a meeting where I'm asked for my advice, perform a few quick tests on an application, or act as the main tester for a large project, I want every interaction to look good yet easy. I want to test software and find bugs by just looking, or so it would seem to the onlooker.

Tuesday, 19 July 2011

That's funny

From other cartoons and from following me on the Twitter-sphere, you may have noticed I like exploratory testing. Exploratory testing is not a technique but an approach, a mind-set to testing. It's often contrasted with scripted testing, but it's not as simple as saying they are opposites.

At my last two workplaces, I've introduced a more exploratory style of testing, but the fact is they were already doing it without realising. What I introduced was a way of making the exploratory testing more visible and manageable, so clients could see the testing that was performed.

The major reason I like the exploratory testing approach is that I believe it is a more natural style of testing. With scripted testing, especially highly detailed scripts, the tester has to follow the script when executing it. By doing this, they may miss other bugs in the software, since the script does not require the tester to check for them. This is especially the case for layout and usability issues, which in the past may not have been business critical but are increasingly becoming so. If you have an awkward, unintuitive software application, users will be turned off by it and look for alternatives. With good exploratory testing, the tester is well equipped and uses heuristics or guides as reminders of areas to look at.

Cem Kaner originally came up with the exploratory testing term and James Bach has developed the approach and provides training to develop exploratory testing skills. One heuristic that has triggered many bug finds is the "that's funny" heuristic. If you are ever using or testing an application and you notice something strange or you have a gut feeling something isn't quite right, then keep searching, you might find a bug!

Friday, 15 July 2011

Peeping Toms

According to Wikipedia, the fountain of all knowledge, a scientist engages in a systematic activity to acquire knowledge. When it comes to software testing, I feel this description of a scientist applies well to the day-to-day activities of a tester. For example, a tester, with varying degrees of structure, will systematically test the software. Through these actions, the tester learns how the software behaves, and so acquires knowledge. From this new knowledge, the tester can assess whether there are any issues to raise with the project team.

At school, during my science lessons, the teacher would ask the class to come up with a hypothesis, and we would then have to test this hypothesis to confirm or reject it. Again, this isn't very different at all from testing. For example, when testing a login screen, I might come up with the hypothesis that the login screen will accept my username without a password. I would then test this to check whether my hypothesis is right (hopefully I'll be wrong!). If time permits, I could create new hypotheses and continue to learn about the software I'm testing. I might do these actions in parallel, coming up with hypotheses and testing them at the same time (a more exploratory style of testing), or I might write the hypotheses down, as test scripts, and execute these tests at a later date. Either way, it's testing using hypotheses to drive the testing.
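The login hypothesis above can be written down as a small, repeatable check. The sketch below is hypothetical: `login` and `buggy_login` are toy stand-ins for an application under test, and the single known user is invented for the example. The hypothesis function returns `True` when the application does let a user in with a blank password, that is, when the hypothesis is confirmed and a bug has been found.

```python
# Toy user store for the examples below (hypothetical)
USERS = {"alice": "s3cret"}


def login(username: str, password: str) -> bool:
    """A correct login: the password must match the stored one."""
    return USERS.get(username) == password


def buggy_login(username: str, password: str) -> bool:
    """A faulty login: a blank password skips the comparison."""
    if username not in USERS:
        return False
    return password == "" or USERS[username] == password


def accepts_blank_password(login_fn) -> bool:
    """Hypothesis: the application accepts a known username with no password."""
    return login_fn("alice", "")


print(accepts_blank_password(login))        # False: hypothesis rejected
print(accepts_blank_password(buggy_login))  # True: hypothesis confirmed, bug found
```

Writing the hypothesis as a function makes it easy to re-run later, which is roughly the "write it down as a test script" route; trying it by hand at the keyboard is the exploratory route.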

Another aspect of using scientists as an analogy for testing is the fact that they need to keep many variables constant. In testing we often do this, sometimes without being aware of it. When first testing the login page, let's say of a web app, we would keep the browser and version constant to test the main features. If it works on one browser, we may choose to test on other browsers, or possibly try some performance testing by increasing the number of users already logged in or trying to log into the web application.
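Keeping every variable constant except one is easy to express as data. The sketch below is a hypothetical illustration: the baseline configuration, the browsers and the user counts are invented, and generating configurations one factor at a time is just one simple way to honour the "change one thing" idea, not a complete test plan.

```python
# Hypothetical baseline: the configuration used to test the main features first
BASELINE = {"browser": "Firefox 5", "logged_in_users": 1}

# Hypothetical variations to try once the baseline works
VARIATIONS = {
    "browser": ["Chrome 12", "Internet Explorer 9"],
    "logged_in_users": [50, 500],
}


def one_factor_at_a_time(baseline: dict, variations: dict) -> list:
    """Return the baseline plus one configuration per variation, each changing a single factor."""
    configs = [dict(baseline)]
    for factor, values in variations.items():
        for value in values:
            config = dict(baseline)  # start from the constants
            config[factor] = value   # change exactly one variable
            configs.append(config)
    return configs


for config in one_factor_at_a_time(BASELINE, VARIATIONS):
    print(config)
```

If something breaks in one of the varied configurations, you know which single variable changed, much as a scientist would.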

James Bach came up with a short definition of testing: "testing is questioning software". By questioning the software and gathering the answers from using it, we learn more about the software and where the bugs may be hiding. Here’s a definition of my own (taken from Mr Koestler): “Testers are the peeping toms at the keyhole of software”. OK, it’s not as good, but hopefully the point comes across :)

Wednesday, 13 July 2011

Busy

I'm a person who loves the technical side of testing. When allowed, I like to get under the covers and look at the code I'm testing. Not that I can always understand it, but the code may trigger new testing ideas to investigate.

In a similar vein, I enjoy using test automation, and I particularly like writing code for automation. In my current post, I've been given the opportunity to learn Selenium, an open source web test automation tool; I'm using C# and NUnit to drive the testing. It's been fantastic! I love it! It gives me great pleasure to see lots of checks flying through the web app under test. I say 'checks' because that's what they are, lots of checks. If I code a check for the title of the web browser, it will check the title of the web browser: nothing less and nothing more.

You may already know that test automation would never replace the testing capabilities of a human being, especially a human being who knows how to test. This is something I need to be reminded of on a regular basis. Since I love test automation, I could spend days writing code for a sharp looking automated regression test and forget to spend a reasonable amount of time testing new functionality, highly used features, critical areas of the software.

As a tester, it's important I continually assess the benefit of the work I'm doing. Is what I'm doing the best thing or should I be doing something else right now? A problem with automation is it can provide a false sense of achievement. Like I said, it's just checking, and often doesn't find as many defects compared to manual testing (the last sentence was an understatement). Michael Bolton was the first person to highlight and describe the differences between checks and tests. You can read more about the differences on his blog posts.

Monday, 11 July 2011

Become your enemy

In the world of software testing, there are many testing techniques. The primary objective of a testing technique is to help the tester do some actual testing. Every technique has its strengths and its weaknesses. Some techniques can help decide who does the testing (e.g. unit/user/beta testing), other techniques can help identify which areas to test, but not one technique can help in every way.

One technique that gets a lot of air time in the software testing industry is Risk Based Testing (RBT). There are two ways that RBT is used and described. One is where you have a set of tests in mind and you use a risk approach to prioritise the tests. The higher the risk, the higher the priority and the sooner you execute the test. The second is where the tester (or project team) generates a set of risks that may apply to the application under test, and then tests the application to see whether each risk does apply (please Google RBT if you want a longer and better description).

The thing that annoys me about RBT is that some people think it’s the be-all and end-all. It’s not; it’s just a technique, an approach. It has its strengths, but it also has weaknesses. For example, it’s not very easy to get a reliable idea about feature or code coverage using RBT. You might have good coverage of the risks, but you may be concentrating on one area of the functionality. The risks themselves are heavily influenced by the people or testers analysing them, so you could end up with a narrow focus (tunnel vision). Another weakness is that RBT doesn’t tell you how to actually do the testing: should it be heavily scripted or use a more exploratory approach? Also, who’s to say the risks are realistic? Maybe they are too far-fetched.
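The first use of RBT described above, ordering an existing set of tests by risk, can be sketched in a few lines. This is an illustration under assumptions, not a standard: the post does not say how risk is scored, so the sketch uses the common convention of likelihood multiplied by impact on a 1 to 5 scale, and the test ideas are invented. The ordering is only as good as the scores people assign, which is exactly the tunnel-vision weakness mentioned above.

```python
from dataclasses import dataclass


@dataclass
class RiskItem:
    """A hypothetical test idea with assumed 1-5 scores for likelihood and impact."""
    name: str
    likelihood: int  # 1 = unlikely to fail, 5 = very likely to fail
    impact: int      # 1 = cosmetic, 5 = critical to the business

    @property
    def risk(self) -> int:
        # Assumed scoring convention: risk = likelihood x impact
        return self.likelihood * self.impact


def prioritise(ideas: list) -> list:
    """Highest risk first, so it is executed soonest."""
    return sorted(ideas, key=lambda idea: idea.risk, reverse=True)


ideas = [
    RiskItem("Change profile picture", likelihood=2, impact=1),
    RiskItem("Pay with a saved card", likelihood=3, impact=5),
    RiskItem("Log in with valid details", likelihood=2, impact=4),
]

for idea in prioritise(ideas):
    print(idea.risk, idea.name)
```

Note what the sketch does not do: it says nothing about how each test should be carried out, or whether anything outside the listed ideas gets tested at all.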

With that in mind, I want to introduce the perfect software testing technique. As far as I know (and I know a lot), this technique has no weaknesses, it is pure strength! The technique is called “Know your bugs” © 2011.

With this technique, the tester imagines or visualises how a bug could appear in the application (the tester becomes the bug). With this in mind, the tester executes a test to confirm or reject that the bug exists. Pretty simple really. And please don’t confuse this technique with RBT, it’s totally different.

Wednesday, 6 July 2011

Anxiety

"Why didn't you find this bug?", "Why didn't you test that?" These questions can drive a tester to madness with worry, stress and fear, the sort of feelings that often get tagged as negative, the ones to avoid. But feelings aren't negative or positive in themselves; it's how you react to them that counts.

As a dad, there are times when I worry for the safety of my children. Some of these worries can seem very real, and the worry quickly changes into a fear. Typically, this changes how I act or react as a father. I would hold on to my child a little more tightly. I would keep an eye on them a little bit longer. I would instruct them a little more clearly. All this is good; in fact, it's in our genes. As parents, we want the best for our children. This includes their safety, as well as their education, security, etc. The trick, and it's a very hard trick to get right, is knowing where the parenting stops and the independent child is set free! That's something I will need to learn.

In software development, the worries and fears are altogether different from parenting, but worries and fears they still are. There is no excuse for a toxic working environment, where management instils a blame culture. But even in healthy working environments, testers, including myself, can worry that once the product is Live, a bug will rear its ugly head when it could've been spotted during development. It's how we react to these thoughts that will indicate how we're going to survive as testers. Are we going to be down-and-out testers? Or live-for-another-day testers?

When you start your next test project, when preparing the test plan or attending the first project meeting, ask yourself "how do I want to feel the moment the software is put Live?" My response is "A modest confidence that I did my best as a tester". From here, I would go and learn about the application, ask questions, be a team player, do my utmost to find important bugs quickly and communicate my findings to the project team.

My final point goes to those who don't ever feel worried: one day you might wake up… just saying…

Tuesday, 5 July 2011

Fishing Tales

Hi one and all,

Once again I’ve failed to update this blog.

I wish I had a good reason for it, but I don’t.

As a way to win back your favour, I’ve decided to post a totally brand new set of cartoons on this blog. The plan was to print them in an e-book and sell it through Amazon, but I changed my mind!

The new cartoons are based on the experiences of cats trying to catch mice. The cats and mice are an analogy for testing, where testers are trying to find bugs, but please note, I’m not calling all testers cats nor all bugs mice. It’s an analogy; it only applies up to a point. Most cats are cute, most testers are… well, you get the message.

With each cartoon I have added a quote that I think relates to testing. I got the idea of using quotes from a Twitter hashtag – #ihatequotes !!

Like all previous cartoons, feel free to use the new cartoons in presentations, but please contact me if you plan to use them in merchandise or magazines.

Alongside the cartoons, I will share some of my experiences in testing. I hope they come in useful.

Over the coming weeks I’ll be posting the Cat and Mouse cartoons, stay tuned :o)

Before all that, here’s one of my favourite cartoons; it was printed in the Testing Planet at STC. I like it for two reasons: one, it took a number of drafts before I was happy with it; two, it’s an area of testing I feel I need to improve on – selling the testing tale!

Tuesday, 10 May 2011

Rikard's cartoon

The EuroSTAR conference programme for 2011 came out today. The line-up looks very promising. At last year’s conference I got to meet many great testers. Rikard Edgren is one of them. He writes for The Testing Eye, and as far as I know, he is the only tester who has come up with a testing concept using a vegetable – Potato Testing!

Rikard came up with the design/dialogue for the following cartoon – all I did was draw it. Thanks Rikard!

I should also apologise for not updating this blog! Sorry, everyone.

I started a new job at the beginning of March, and with a few other things going on, my brain has been on overload. But don’t fear, I can’t imagine I’ll ever stop drawing :)

Friday, 8 April 2011

Testers are Ninjas

Hi all,

Tony Bruce (twitter: tonybruce77) is organising a fantastic software testing workshop event this May in London: London Tester Gathering 2011. The programme looks very promising and it's free to attend. The only bad thing is that there aren't many places left, so you'd better be quick and register if you want to attend.

Tony asked me to draw a cartoon for the event. So here it is! Hope you like it. The theme is ninjas, as the Skills Matter web site, which organises loads of free training events, has a ninja theme to it as well.

Thursday, 24 March 2011

Wednesday, 16 March 2011

Sunday, 13 February 2011

A great team

A couple of weeks ago I blogged about the cartoon tester’s 1st year. One thing I failed to mention (purposefully), is the fact that over Christmas I accepted a new software testing job at a different company. I’m really excited about the role and looking forward to the new challenges!

While deciding to accept the job, I knew I was going to find resigning and leaving my current job difficult. The reality has been much more difficult than I envisaged. I’m totally going to miss the people I work with. I’m especially going to miss my team. They are all absolutely great people and have made my job a lot easier over the several years we’ve been working together. Here’s a cartoon for my team… probably the greatest software test team there ever was…

Tuesday, 1 February 2011

Friday, 28 January 2011

What happens when Testers meet up???

Last year I attended a Tester Gathering in London that Tony Bruce organises, I was very impressed with the format and general atmosphere of the event - he's done a great job! So much so, that my colleague Adam Brown and I are now organising a similar event in Nottingham. With lots of help from STC, we are holding the first ever Nottingham STC Meetup on Feb 23rd.

- It will be free to attend and James Bach has kindly agreed to give a presentation!

- Please check Adam’s post with more details of the event.

- And register for the event at the STC meetup site

P.S. I say Adam and I are organising it, the truth is Adam is doing all the work!

Tuesday, 25 January 2011

Wednesday, 19 January 2011

The 'Legendary' Pradeep Soundararajan

There is no doubt about it, today's cartoon was inspired by Pradeep Soundararajan. He's a bit of a genius; you should check out his blog.

Recently, he emailed me about a couple of cartoon ideas. I love it when this happens; new ideas seem to trigger even more ideas. One negative side effect, though, is that I end up feeling like a pessimistic developer! There is no way I can meet the [cartoon] requirements, as I will understand the requirements differently from the way people think they have explained them. But not to worry, I always provide a prototype of the cartoon to check it meets some level of satisfaction/quality :o)

Anyway, this cartoon is my representation of Pradeep's idea. Thanks Pradeep!

Friday, 14 January 2011

Tuesday, 11 January 2011

1 Year Anniversary

Today is the Cartoon Tester 1 year anniversary and what a year it has been!

Thank you all for following the blog and leaving comments. Initially I thought I would only run the blog for a few months, but due to the great response I decided that it was definitely worth continuing.

Some of my Highlights for the Tester Cartoon blog include:

Wow! What a list! Thank you again for all the great support I’ve received that has made the above a reality.

Recently, a few people have asked when and why I started drawing cartoons about testing. I think some of them were asking ‘Why? Why are you wasting your time drawing stupid cartoons?” but I think others were genuinely interested!

There are a number of reasons why…

One was that I wanted to get more involved in the wider testing industry. One option was to start a typical blog but I knew I wasn’t great at writing articles.

I knew that I had ‘some’ skill at drawing cartoons. I used to draw cartoons about my wife and I and our relationship together. Some of the cartoons made her laugh, so I thought I was unto something as she’s my toughest critic!

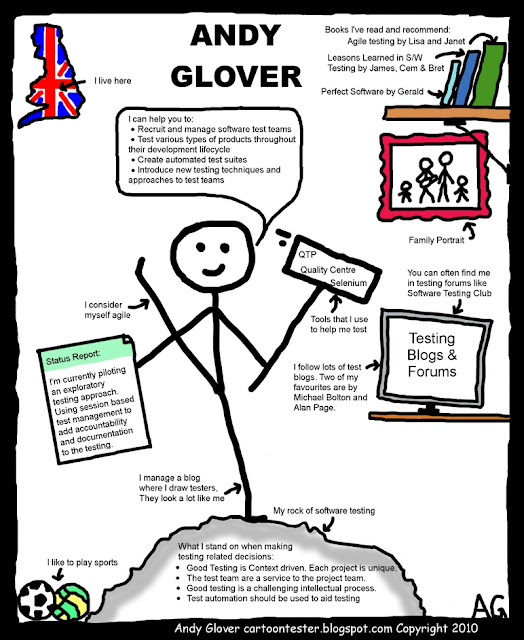

Another reason was that I wanted to improve my employability. I thought my CV/resume was boring to read, I felt I needed something extra, something a bit different.

With all this, I started drawing cartoons about testing early 2009 and ended up with around 20-30 cartoons, I submitted them all for review to the first STC magazine. I received such a great response from them that I decided to start this blog. Like they say… and the rest is history :o)

Here’s my updated CV, I had to remove some private information, but I think it looks much better than my old one…

Thank you all for following the blog and leaving comments. Initially I thought I would only run the blog for a few months, but due to the great response I decided that it was definitely worth continuing.

Some of my Highlights for the Tester Cartoon blog include:

- Over 30,000 direct visits to the site – that’s incredible :o)

- Cartoons printed in a number of magazines/newspapers/ebooks:

- STC: Issues 1, 2, 3, If I was a test case I would

- STP: Vol. 7 No. 5 and No. 6

- Many Cartoons translated into Russian

- The site is Number 32 in the charts!

- Cartoon Tester display stand at EuroSTAR 2010

- Received a hard copy of I am a bug

- Accepted into the w-a-t group.

- Got free entry to ExpoQA

- Cartoons displayed in Test Conference in Croatia

- Mr. Fails

Wow! What a list! Thank you again for all the great support I’ve received that has made the above a reality.

Recently, a few people have asked when and why I started drawing cartoons about testing. I think some of them were asking ‘Why? Why are you wasting your time drawing stupid cartoons?” but I think others were genuinely interested!

There are a number of reasons why…

One was that I wanted to get more involved in the wider testing industry. One option was to start a typical blog but I knew I wasn’t great at writing articles.

I knew that I had ‘some’ skill at drawing cartoons. I used to draw cartoons about my wife and I and our relationship together. Some of the cartoons made her laugh, so I thought I was unto something as she’s my toughest critic!

Another reason was that I wanted to improve my employability. I thought my CV/resume was boring to read, I felt I needed something extra, something a bit different.

With all this, I started drawing cartoons about testing early 2009 and ended up with around 20-30 cartoons, I submitted them all for review to the first STC magazine. I received such a great response from them that I decided to start this blog. Like they say… and the rest is history :o)

Here’s my updated CV, I had to remove some private information, but I think it looks much better than my old one…

Friday, 7 January 2011

Tuesday, 4 January 2011
